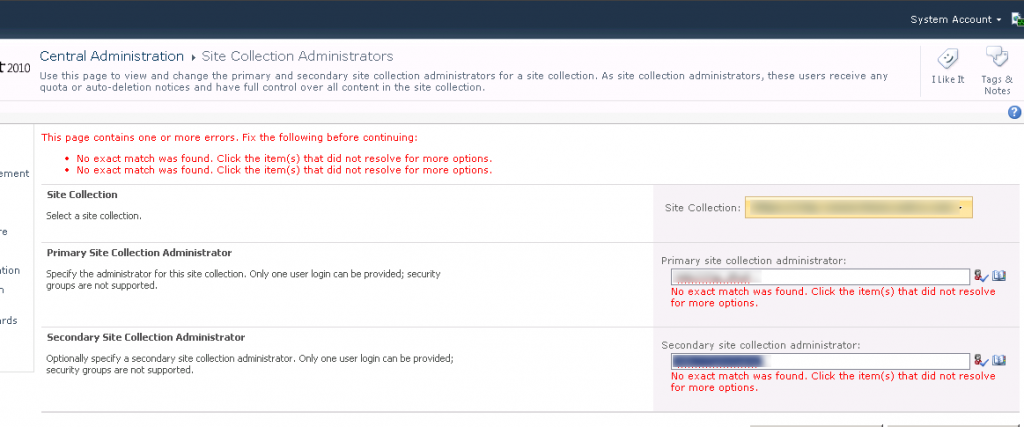

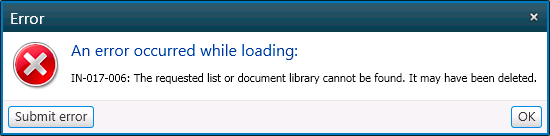

I’ve run into this error a few times in ShareGate:

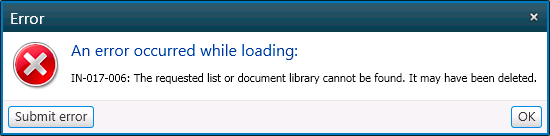

You try to open a Site Collection or web, and see this:

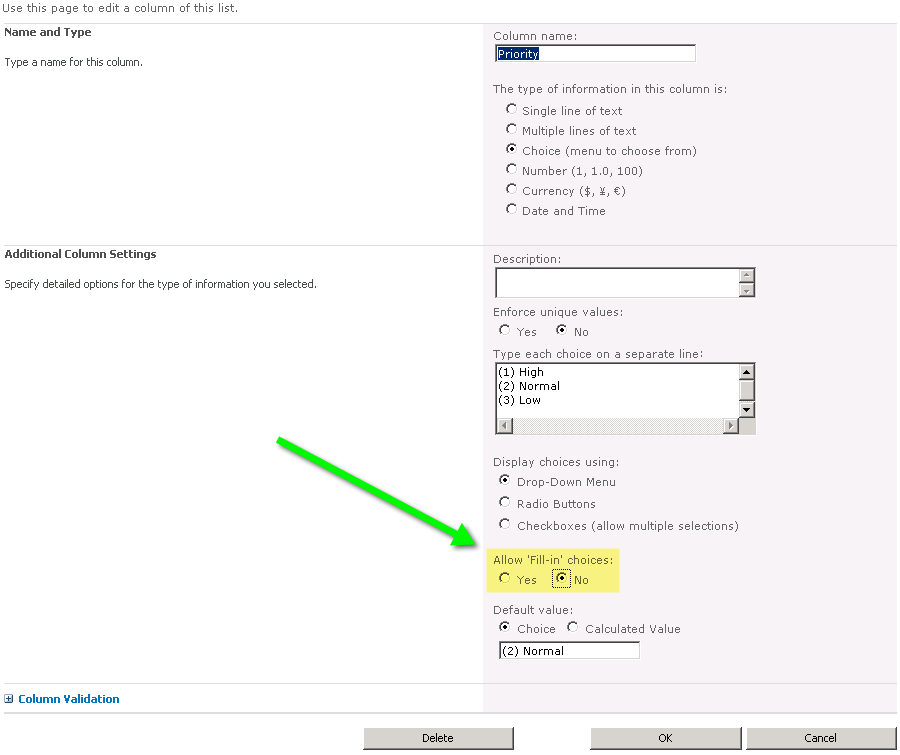

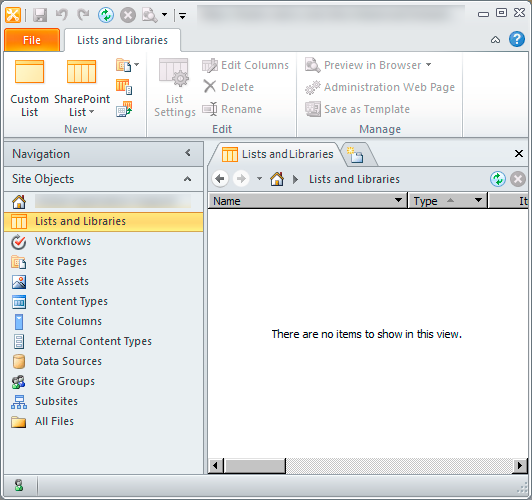

SharePoint Designer is not much help either:

The solution, after much trial and error, was actually pretty simple!

But before I get to the solution, let’s look at some things I tried that led me to it…

I searched the term “there are no items to show in this view” for SharePoint Designer on the internet. This turned up a few possibilities – one being a corrupt /_vti_bin/Listdata.svc.

That wasn’t the case for me: some webs from the same site collection worked just fine, and only one specific web did not.

The other thing that turned up was the possibility of a corrupt list, often due to a missing feature needed by the list.

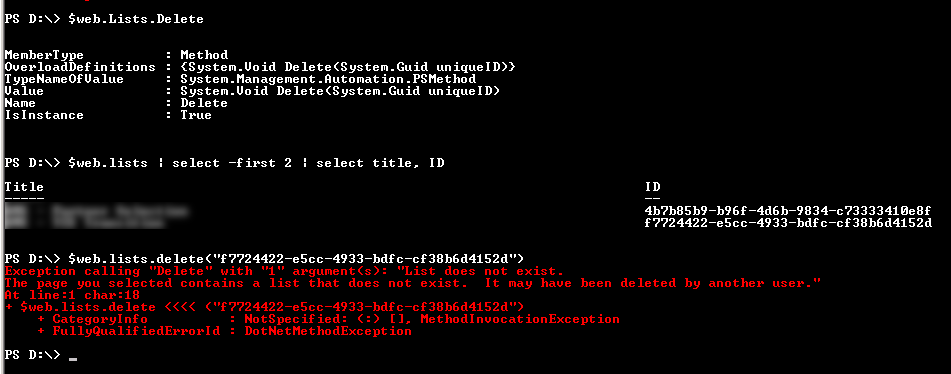

I turned to PowerShell and ran the following commands:

    $web = Get-SPWeb http://my.url.com/sites/mynoncooperatingweb
    $web.Lists

This started to return results, then errored out.

I was definitely on to something – there was something wrong with one of my lists.

Next I needed to figure out which one.

There are a few ways to do this – the easiest would likely have been to go to the website, choose “Site Actions” -> “View all site content”, and then just click on each list.

I didn’t do that.

Instead I mucked with PowerShell some more. The error I got when I ran the code above told me which list was causing the problem.
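If the error message alone doesn’t make the culprit obvious, one way to narrow it down is to load the lists one at a time and catch whichever one throws. This is only a sketch, not what I actually ran: it assumes the SharePoint 2010 Management Shell on a farm server, reuses the placeholder URL from above, and assumes that `$web.Lists.Count` and the index lookup still work on a web with a corrupt list, which may not hold in every case.

```powershell
# Sketch only: assumes a SharePoint 2010 farm server; the URL is the placeholder used above.
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

$web = Get-SPWeb http://my.url.com/sites/mynoncooperatingweb
try {
    $count = $web.Lists.Count
    for ($i = 0; $i -lt $count; $i++) {
        try {
            # Load one list at a time so a single bad list doesn't stop the loop
            $list = $web.Lists[$i]
            "{0}`t{1}`t{2}" -f $i, $list.ID, $list.Title
        } catch {
            Write-Warning ("List at index {0} could not be loaded: {1}" -f $i, $_.Exception.Message)
        }
    }
} finally {
    # Release the SPWeb object when done
    $web.Dispose()
}
```

Any index that produces a warning instead of a title is a candidate for the broken list.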

My next step was to see if there was any data in the list.

Based on “Site Actions” -> “View all site content”, it had an item count of zero, but I wasn’t sure I could trust this given that the list was corrupt.

So I turned to SQL. I knew which content database the site was in (Get-SPContentDatabase -Site $url will tell you this).

    SELECT TOP 1000 *
    FROM AllDocs
    WHERE DirName LIKE 'url/to/my/corrupt/document/library/%'
    ORDER BY DirName

This listed a few results, but they looked like what is usually found in the Forms folder. I didn’t, for example, see anything at all that looked like end user data or documentation.

Armed with this reassurance that the list was not needed, it seemed the easiest way forward was just to delete the list. This would be easy, or so I thought…

I didn’t have any hopes of deleting the list through the UI (though to be clear, it did show up under “Site Actions” -> “View all site content”, and clicking on it threw an error).

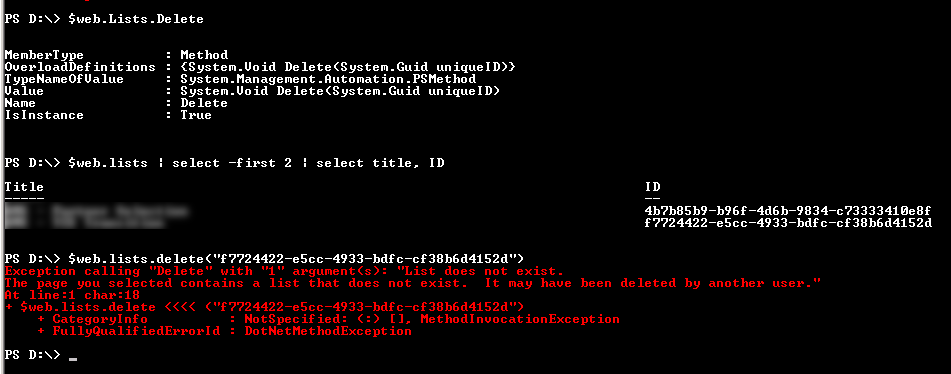

So I again turned to PowerShell:

I saw a Delete method on the list collection, so I called it without the ( ) to see what it was looking for. It needed a GUID.

I then listed the IDs of the lists in my site. In my case, the second list was the corrupt one.

Then I passed that GUID to the Delete method and got this error:

“List does not exist”

So that didn’t work.
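For reference, the steps above would look roughly like the following. This is a reconstruction, not my exact commands: the GUID is an all-zero placeholder that you would replace with the ID of your own corrupt list, and the URL is the same placeholder as before.

```powershell
# Sketch only: assumes the SharePoint 2010 Management Shell; URL and GUID are placeholders.
$web = Get-SPWeb http://my.url.com/sites/mynoncooperatingweb

# Referencing a method without parentheses prints its overloads (here, one taking a GUID)
$web.Lists.Delete

# Show titles and IDs; this may stop with an error when it reaches the corrupt list
$web.Lists | Select-Object Title, ID

# Placeholder GUID: substitute the ID of the corrupt list
$listId = [Guid]"00000000-0000-0000-0000-000000000000"
$web.Lists.Delete($listId)

$web.Dispose()
```

In my case the last call is the one that failed with the error above.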

Next I searched the internet for more information.

I found a post that mentioned the recycle bin. Aha!

I looked at “Site Actions” -> “View all site content” and then the recycle bin, which of course was empty.

So I went to Site Actions -> Site Settings -> “Go To Top Level Site Settings”, and then under the Site Collection Administration heading, Recycle Bin.

After sorting by URL, I found the corrupt list there.

First I tried restoring the list, but that threw an error.

Then I tried to delete the list. That worked, and put the item in the second-stage recycle bin.

Next I went to the 2nd Stage “Deleted from end user Recycle Bin” area and deleted it from there.
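I did all of this through the browser, but the site collection recycle bin can also be inspected from PowerShell. The snippet below is a sketch I did not use at the time: it assumes the same placeholder URL and only lists deleted lists across both stages, sorted by path, so you can spot the corrupt one.

```powershell
# Sketch only: assumes the SharePoint 2010 Management Shell; the URL is a placeholder.
$web = Get-SPWeb http://my.url.com/sites/mynoncooperatingweb
try {
    # SPSite.RecycleBin is the site collection recycle bin (first and second stage)
    $web.Site.RecycleBin |
        Where-Object { $_.ItemType -eq "List" } |
        Sort-Object DirName |
        Select-Object ID, Title, DirName, LeafName, ItemState, DeletedDate
} finally {
    $web.Dispose()
}
```

Each item returned also exposes Restore() and Delete() methods. I haven’t tested those against a corrupt list, so treat that route as an assumption rather than a confirmed fix.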

Back in “Site Actions” -> “View all site content”, the list no longer showed up.

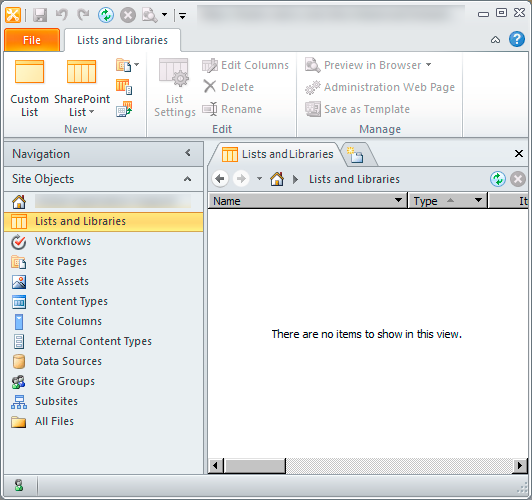

I relaunched SharePoint Designer and was again able to bring up the list of lists and libraries.

I again tried a migration in ShareGate and it’s purring along like a kitten.

So to make my long story short, the error in ShareGate was caused by a corrupt/broken list – this same error affected SharePoint Designer (they both presumably use the same web service interface to get information from SharePoint) and to a degree, it even affected looking at the lists in PowerShell.

I suspect there is a bug somewhere, but I suppose it’s possible the user hit delete at the exact instant the application pool restarted or something like that.

At the time this happened we were running SP2010 SP1 + June 2011 CU. If you run across a similar situation, please leave a comment with your version of SharePoint. Hopefully this is addressed in SP2!

– Jack